Memory giant Samsung released its revolutionary CXL Memory Module (CMM) roadmap at the 2025 OCP Global Summit and presented in detail the heterogeneous memory system architecture it has designed for next-generation agentic AI server platforms.

Samsung stated that the core of this announcement is the continued expansion of the CMM-D series, which is intended to improve memory scalability and efficiency for data centers and AI workloads through CXL technology and to consolidate Samsung's long-term market leadership.

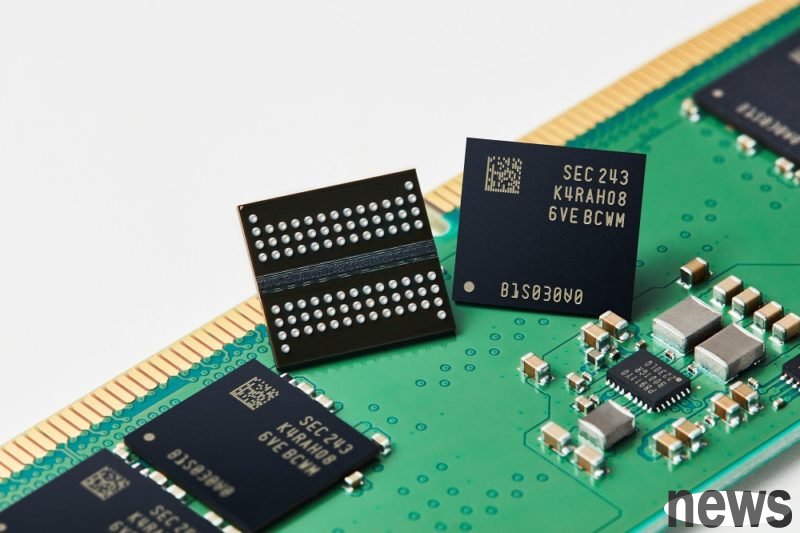

CMM-D moves towards 1TB capacity and 72GB/s bandwidth

Samsung is actively expanding its CMM roadmap, especially the CMM-D series, and aims to launch the ultimate version of CMM-D in 2025 to meet its commercialization goals. The CMM-D product series is an important CXL memory expansion solution in the industry, providing scalable, high-capacity memory for data center and AI workloads.

Samsung pointed out that the roadmap is currently at the CMM-D 1.1 stage, which covers the industry's first CXL solution and uses open-source firmware. CMM-D 2.0 focuses on building learning platforms and providing comprehensive solutions. It offers 128GB or 256GB capacity options and supports bandwidth of up to 36GB/s. These products comply with the CXL 2.0 standard, use a PCIe Gen 5 x8 interface, and support DDR4/DDR5 dual DQS channels. Customer samples are currently being prepared.

CMM-D 3.1 is Samsung's vehicle for long-term technology development, with design introduction expected in 2025. Its capacity scales up to 1TB, its bandwidth doubles to 72GB/s, and it supports CXL 3.0, in particular transceiver technology for longer cable lengths. The interface is upgraded to PCIe Gen 6 x8 and continues to support DDR5 dual DQS channels. Additional features of CMM-D 3.1 include ITB and a compression FMM engine. The first batch of CMM-D 3.1 samples will be launched soon.
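To put the two generations side by side, the short Python sketch below tabulates the figures quoted above and compares them with the raw one-direction rate of each PCIe link. The per-lane rates (32 GT/s for PCIe Gen 5, 64 GT/s for Gen 6) come from the PCIe base specifications. Treating 1TB as 1024GB, and the helper name `raw_link_gb_per_s`, are assumptions made for illustration. The quoted bandwidths sit above the raw one-direction rate, so they may count read and write traffic together; the article does not say how they were measured.

```python
# Per-lane signalling rates in GT/s for PCIe Gen 5 and Gen 6.
PCIE_GT_PER_LANE = {5: 32, 6: 64}


def raw_link_gb_per_s(gen: int, lanes: int) -> float:
    """Raw one-direction link rate in GB/s, ignoring encoding and protocol overhead."""
    # One bit per transfer per lane, eight bits per byte.
    return PCIE_GT_PER_LANE[gen] * lanes / 8


# Figures as quoted in the article; 1TB is assumed to mean 1024GB.
roadmap = {
    "CMM-D 2.0": {"gen": 5, "lanes": 8, "quoted_gb_s": 36, "max_capacity_gb": 256},
    "CMM-D 3.1": {"gen": 6, "lanes": 8, "quoted_gb_s": 72, "max_capacity_gb": 1024},
}

for name, spec in roadmap.items():
    raw = raw_link_gb_per_s(spec["gen"], spec["lanes"])
    print(f"{name}: quoted {spec['quoted_gb_s']} GB/s, "
          f"raw x{spec['lanes']} link {raw:.0f} GB/s per direction, "
          f"up to {spec['max_capacity_gb']} GB")

old, new = roadmap["CMM-D 2.0"], roadmap["CMM-D 3.1"]
bandwidth_ratio = new["quoted_gb_s"] / old["quoted_gb_s"]
capacity_ratio = new["max_capacity_gb"] / old["max_capacity_gb"]
print(f"Bandwidth x{bandwidth_ratio:.0f}, capacity x{capacity_ratio:.0f}")
```

Under these assumptions the step from CMM-D 2.0 to 3.1 doubles the quoted bandwidth, in line with the doubling of the PCIe per-lane rate, while the maximum capacity grows fourfold.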

DCMFM achieves the goal of software-defined memory

Samsung also emphasized the goal of software-defined memory through Data Center Memory Fabric Management (DCMFM). Samsung said that as data center workloads grow more complex, especially in AI and Kubernetes applications, relying solely on traditional memory configuration methods is no longer enough. Existing approaches often depend too heavily on memory usage predictions, which leads to inaccurate resource forecasts, over-commitment, and difficulty knowing exactly how much memory an application requires.

To address these challenges, Samsung launched the Data Center Memory Fabric Management (DCMFM) system as an example of its CMS software solution. The DCMFM architecture provides centralized management of memory allocation and deallocation. The system consists of the DCMFM Manager, which is responsible for memory allocation management, and the DCMFM Agent, which processes memory allocation requests from the Manager. The DCMFM Monitor is used to observe allocated and terminated memory requests.
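The division of labor between the three components can be pictured with a toy model. The Python sketch below is not Samsung's implementation or API; the class names mirror the roles described above, and every method name, field, and size is hypothetical. It only shows the idea of a central Manager that keeps the pool accounting, an Agent per host that applies the Manager's requests, and a Monitor that records each allocation and release.

```python
from dataclasses import dataclass, field


@dataclass
class Monitor:
    """Hypothetical observer: records every allocation and release event."""
    events: list = field(default_factory=list)

    def record(self, kind: str, host: str, size_gb: int) -> None:
        self.events.append((kind, host, size_gb))


@dataclass
class Agent:
    """Hypothetical per-host agent: applies requests sent by the Manager."""
    host: str
    attached_gb: int = 0

    def apply(self, delta_gb: int) -> None:
        self.attached_gb += delta_gb


class Manager:
    """Hypothetical central bookkeeping for one pool of CXL memory."""

    def __init__(self, pool_gb: int, monitor: Monitor):
        self.free_gb = pool_gb
        self.monitor = monitor
        self.agents = {}

    def register(self, agent: Agent) -> None:
        self.agents[agent.host] = agent

    def allocate(self, host: str, size_gb: int) -> bool:
        # Refuse requests the pool cannot satisfy instead of over-committing.
        if size_gb <= 0 or size_gb > self.free_gb:
            return False
        self.free_gb -= size_gb
        self.agents[host].apply(size_gb)
        self.monitor.record("allocate", host, size_gb)
        return True

    def release(self, host: str, size_gb: int) -> bool:
        agent = self.agents[host]
        if size_gb <= 0 or size_gb > agent.attached_gb:
            return False
        agent.apply(-size_gb)
        self.free_gb += size_gb
        self.monitor.record("release", host, size_gb)
        return True


monitor = Monitor()
manager = Manager(pool_gb=256, monitor=monitor)
manager.register(Agent("host-a"))
granted = manager.allocate("host-a", 64)
returned = manager.release("host-a", 16)
print(manager.free_gb, monitor.events)
```

Keeping the accounting in one place is what lets the Manager reject a request outright when the pool is short, rather than relying on each host's own usage prediction.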

With this architecture, Samsung emphasizes that DCMFM integrates seamlessly with Kubernetes, provides topological views of CXL devices, switches and hosts, and supports resource decoupling and redistribution in both direct-connection mode and pooling mode. In addition, using the CMS software (DCMFM), Samsung demonstrated dynamic memory resizing as a way to deal with memory exhaustion. The solution runs on the latest vanilla x86 and Linux platforms with server CPUs that support CXL 2.0. It also offers a direct pooling function, which is implemented on the LD unit through VNNP to PPM bridging technology.
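The article does not describe how the dynamic resizing demo decides when to grow a workload's memory. One simple policy, sketched below in Python purely as an illustration, is to grant an extra slice from the pool whenever usage crosses a threshold of the current limit. The function name, the 90% threshold, and the 16GB step are all hypothetical, and nothing here uses a real Kubernetes or DCMFM interface.

```python
def resize_on_pressure(limit_gb, used_gb, pool_free_gb, step_gb=16, threshold=0.9):
    """Return (new_limit_gb, new_pool_free_gb) after one resize decision.

    Hypothetical policy: grow the limit by up to step_gb when usage reaches
    threshold * limit, taking the extra memory from the shared pool.
    """
    if used_gb < threshold * limit_gb:
        return limit_gb, pool_free_gb  # no memory pressure, leave the limit alone
    grant = min(step_gb, pool_free_gb)  # never hand out more than the pool has
    return limit_gb + grant, pool_free_gb - grant


# A workload close to its 64GB limit receives another 16GB from the pool.
under_pressure = resize_on_pressure(limit_gb=64, used_gb=60, pool_free_gb=40)

# A workload with headroom is left unchanged.
with_headroom = resize_on_pressure(limit_gb=64, used_gb=30, pool_free_gb=40)

# When the pool is nearly empty, only the remaining 10GB can be granted.
pool_short = resize_on_pressure(limit_gb=64, used_gb=60, pool_free_gb=10)

print(under_pressure, with_headroom, pool_short)
```

A real controller would also need to shrink limits and return memory to the pool; that path is left out to keep the sketch short.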

Samsung demonstrated a hardware reference design that combines a CXL Switch with CMM-D. In the CXL memory pooling configuration, the host is connected to multiple CMM-D modules through the CXL Switch. This contrasts with the traditional solution, in which the host connects to CMM-D through an Rcvd/Tx Switch.

The need for software-defined memory is urgent

According to cloud service data, only 10% of provisioned CPU resources in AWS, Azure and Google Cloud were utilized in 2023. This highlights the huge waste in data center resource allocation, because memory resources are usually provisioned together with the CPU. With CXL technology, remote memory access significantly improves memory utilization and reduces latency and overhead compared to traditional solutions. Utilization of provisioned CPUs is expected to rise from 13% in 2023 to 23% by 2025.
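To make the size of the projected improvement concrete, the following Python lines work through the arithmetic on the 13% and 23% figures quoted above. The variable names are illustrative only, and the calculation adds no data beyond those two numbers.

```python
# Utilization of provisioned CPUs, in percent, as quoted in the article.
utilization_2023 = 13
utilization_2025 = 23

gain_points = utilization_2025 - utilization_2023    # absolute gain in percentage points
relative_gain = utilization_2025 / utilization_2023  # how many times higher than in 2023
idle_2023 = 100 - utilization_2023                   # share of provisioned CPU left idle
idle_2025 = 100 - utilization_2025

print(f"Gain: {gain_points} points, {relative_gain:.2f}x the 2023 level")
print(f"Idle share: {idle_2023}% in 2023, {idle_2025}% in 2025")
```

Even at the projected 2025 level, roughly three quarters of provisioned CPU capacity would still sit idle, which is the gap that pooled memory is meant to narrow.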

Overall, Samsung has provided a comprehensive heterogeneous memory system architecture for next-generation AI server platforms by combining its high-capacity, high-bandwidth CMM-D hardware roadmap with its DCMFM software management system. According to Samsung, this addresses the current problem of low cloud resource utilization and brings significant performance improvements and TCO optimization to data centers and AI workloads.